Hype, especially in our always-on media age, can distort the importance of any given technological development. There is no doubt that “Artificial Intelligence” (A.I.) and the deep learning algorithms that underpin these approaches are currently ensnared in such a hype cycle.

If we look beyond the hype, humanity is only at the very beginning of learning how to apply A.I. I often compare this moment to the first time you saw Netscape Navigator and thought to yourself, “Huh, this is interesting. I can click on links and see pages. I wonder what this will be useful for?”

While it will take time to separate hype from reality, what will emerge is a set of technological advances with radical, novel capabilities. These capabilities will fundamentally enhance how we use computational approaches in some of humanity’s most critical pursuits.

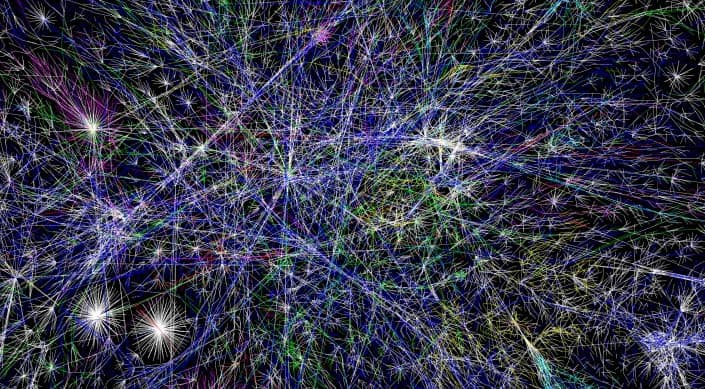

At the heart of these advances is the notion that machines can now learn to recognize patterns, objects and outcomes without being given an explicit set of rules. We can train these systems on examples of a concept, showing them what instances of it “look” like. We can then ask them to find new instances of that concept in large volumes of data.

The human brain works in a broadly similar way. This is still a radical notion for computers, though, because for decades we have managed data with explicit rules and “relational databases”.
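To make the contrast with rule-based software concrete, here is a minimal Python sketch of learning a concept from labeled examples rather than from hand-written rules. It is deliberately not deep learning. It substitutes a simple nearest-centroid classifier over word counts. The example snippets and concept labels are hypothetical. Deep learning systems learn far richer representations, but the workflow is similar: show the system examples, then ask it to recognize new instances.

```python
from collections import Counter
import math

# Hypothetical training snippets, each labeled with the concept it illustrates.
EXAMPLES = [
    ("patient readmitted after heart surgery", "clinical"),
    ("patient discharged with cardiac medication", "clinical"),
    ("molecule binds target protein", "chemistry"),
    ("compound structure shows binding affinity", "chemistry"),
]

def vectorize(text):
    """Turn a snippet into a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def train(examples):
    """Learn one centroid per concept from (text, label) pairs; no rules are written.

    Summed counts point in the same direction as the mean, so they work
    as centroids under cosine similarity.
    """
    centroids = {}
    for text, label in examples:
        centroids.setdefault(label, Counter()).update(vectorize(text))
    return centroids

def classify(centroids, text):
    """Return the concept whose centroid is most similar to the new text."""
    vec = vectorize(text)
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))

model = train(EXAMPLES)
print(classify(model, "new compound binds protein"))  # → chemistry
```

Nothing in the code says what “chemistry” means. The classifier infers it from the words its examples share. The same pattern, scaled up with neural networks and far more data, is what lets deep learning systems find new instances of a concept.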

A Brief History of Databases

Edgar F. Codd, a British computer scientist working at IBM, developed the relational model of data in the late 1960s. He published “A Relational Model of Data for Large Shared Data Banks” in Communications of the ACM in 1970. We have been building databases on his model ever since.

At the core of the relational database model is the idea of a “schema”: the map that tells the computer what sorts of data are in the database and how they relate to each other. Think of the database as a collection of spreadsheets (tables). The schema describes each sheet’s columns and how the sheets can be joined to create new ones.
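A small sketch using Python’s built-in sqlite3 module shows what a schema and a join look like in practice. The tables, columns and rows are hypothetical. The point is that a person must declare the structure up front, including the relationship between the two tables, before the database can answer a question that spans both.

```python
import sqlite3

# In-memory database; the table and column names are hypothetical.
conn = sqlite3.connect(":memory:")

# The schema: a human declares every table, column and relationship by hand.
conn.executescript("""
CREATE TABLE patients (
    patient_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL
);
CREATE TABLE admissions (
    admission_id INTEGER PRIMARY KEY,
    patient_id   INTEGER NOT NULL REFERENCES patients(patient_id),
    admitted_on  TEXT NOT NULL
);
""")

# Hypothetical sample rows.
conn.executemany("INSERT INTO patients VALUES (?, ?)", [(1, "Ada"), (2, "Ben")])
conn.executemany(
    "INSERT INTO admissions VALUES (?, ?, ?)",
    [(10, 1, "2024-01-05"), (11, 1, "2024-02-20"), (12, 2, "2024-03-01")],
)

# Join the two "spreadsheets" on the relationship the schema declares,
# producing a new table: admissions per patient.
rows = conn.execute("""
    SELECT p.name, COUNT(a.admission_id) AS admissions
    FROM patients AS p
    JOIN admissions AS a ON a.patient_id = p.patient_id
    GROUP BY p.patient_id, p.name
    ORDER BY p.name
""").fetchall()
print(rows)  # → [('Ada', 2), ('Ben', 1)]
```

Every table, column and relationship in this example had to be written down by hand. That explicit, human-maintained structure becomes hard to sustain as schemas multiply.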

Creating a database is laborious. To design a schema, a human gives the machine a set of information and tells it how to structure that information. If the person who created a schema leaves the organization, however, others must work out what is in it and how its parts are connected. Integrating schemas from different systems is harder still. The whole approach is breaking down as organizations struggle to keep up with the massive amount of digital data being generated.

As a result, we now have what is called “dark” or “siloed” data. This is valuable data that goes unused, is locked in isolated systems, or is unknown to the organizations that hold it. By some estimates, as much as 90 percent of the data within a given organization is dark or siloed.

Pause, and consider what this means for decision making. Organizations in every industry are making important decisions with incomplete information. There has to be a better way.

A.I. Concept Recognition in Data Sources

Deep learning will help us address our dark and siloed data challenges, because we can now use deep learning techniques to learn the structure of our data for us. Much as the human brain does, A.I. and deep learning approaches can analyze data in its raw form and identify patterns and concepts in the content. In doing so, they unlock the value of dark and siloed data.

Our brains are limited in how much information they can take in and consider at any one time. Research group IDC has forecast that the world will create 163 zettabytes of data by 2025. It simply isn’t possible for a human to read through every data source relevant to a given decision.

With A.I. approaches, we can train the machine to “read” the data for us. It can find the valuable concepts, relationships and parameters, then surface the most salient findings for a human to act on. This idea of an “A.I. agent” capable of reading through vast raw data sources on our behalf is one of the promises of these technologies.

It is important to note, though, that this does not mean A.I. agents will steal our jobs. In fact, just the opposite is true. A.I. will create new jobs for people who learn to use these systems. Our work as humans will move up the value chain, supported by powerful A.I. tools that handle our more mundane tasks.

Another analogy I often use is that of navigation systems in our cars. Navigation systems help us move through a complex information space, enabling us to get to an end goal faster and more efficiently, with the ability to update and re-route along the way. A.I. systems enable the same types of capabilities through larger and larger information spaces – but like a driver, the human will still envision and achieve the end goal.

Identifying Use Cases for A.I.

A.I. is highly relevant in industries such as the life sciences and healthcare, where many complex variables across disparate information sources need to be brought to bear for insight generation and effective decision making.

Imagine a life sciences organization using A.I. to find patterns and relationships across cell images, molecular structures and research reports. It could then identify novel molecules, or existing molecules that could be repurposed for new uses.

Imagine a hospital system being able to recognize patterns in readmissions or novel conditions occurring in patient populations.

Imagine a patent attorney using A.I. systems to surface information relevant to evaluating precedence and prior art.

Beyond the hype, there is incredible, untapped potential for deep learning technologies to help change the lives of millions. Realizing it starts with separating hype from reality. It also means investing in technology platforms that address valuable use cases and harness these game-changing capabilities.